Post 5

Summary: Net Neutrality

There are four primary stakeholders in the issue of net neutrality: consumers, the Federal Communications Commission (FCC), content providers, and Internet Service Providers (ISPs). Each of these groups holds a different position on the issue and has different reasons for holding it.

The first of these four groups is the consumers. Consumers are the everyday people who pay Internet Service Providers for access to the internet. According to several polls, most consumers don’t really understand what net neutrality is. However, once it is explained to them, most seem to support it. Net neutrality rules would prevent ISPs from controlling which parts of the internet we consumers can access and from charging extra for access to specific areas or websites. We don’t want different tiers of internet access, and we certainly don’t want to pay more than we already do for internet. There are some, though, who are opposed to net neutrality, primarily because they are against government regulation as a whole.

The next of these is the Federal Communications Commission. The FCC is “an independent government agency that regulates communication services.” Currently, the FCC is in support of net neutrality. Usually, the government is in support of more government regulation so that it can keep an eye on things. Because net neutrality laws would give the government more control over the internet, the FCC generally supports these laws. On the other hand, because the FCC is a government agency and government actions change based on which political party is in power, the FCC has changed its position on net neutrality a few times.

The third group is the content providers. Content providers are companies like Facebook, Amazon, Netflix, and Hulu that provide online content to users. Content providers are generally in support of net neutrality. This support primarily stems from the fact that, without net neutrality, ISPs are allowed to charge content providers for the speed and quality of service that consumers get when accessing a given company’s online content. For example, an ISP could charge Netflix a fee in exchange for delivering its video to consumers faster. If video streaming is too slow, people might stop using Netflix, so Netflix would be under pressure to pay.

The last of these groups is the Internet Service Providers themselves. ISPs generally oppose net neutrality because it prevents them from charging more. ISPs, like most companies, want to make money and will try almost anything to make more. A common method is throttling, “the intentional slowing (or speeding) of an internet service by an internet service provider.” ISPs slow down access to specific websites and then charge either the consumer or the content provider more to speed up this access. With net neutrality rules in place, ISPs would not be able to control the speed at which specific content gets delivered, which would prevent them from imposing this extra charge on their users.

Analysis: Deplatforming

Opponents of deplatforming believe that creators have a right to free speech and that forcibly removing a creator’s content from a platform unjustly limits that speech. This belief is rooted in a long history of free speech battles in which speech, even hateful or offensive speech, has been protected by courts against many forms of censorship. Such cases go back to the writing of the First Amendment and its first challenges. The Sedition Act was passed in 1798 with the intent to protect the young United States of America from speech and press that were harmful to national security. The Sedition Act was criticized as an “abridgement of the freedom of Speech” specifically prohibited in the First Amendment, regardless of any national security justification, and was eventually allowed to expire in 1801. To anti-deplatformers, this set a precedent: no single entity, be it corporation or government or individual, should be allowed to say what speech is okay and what speech isn’t. Following this principle, they say that neither Facebook nor Twitter nor anyone else can decide to suppress a particular creator or narrative simply because they disagree with it. Since social media sites have become the de facto public forum, and even news source, for many people, they have a responsibility to carry all opinions and narratives. To do otherwise would be unduly suppressing speech.

Proponents of deplatforming the creators of hateful and offensive content fundamentally disagree with anti-deplatformer arguments regarding the duty social media platforms have to their users. Social media platforms such as Facebook, Instagram, and Twitter are private corporations. They do not act like governments, nor do they have the same responsibilities as governments. In fact, they define their own social responsibilities according to their mission statements and codes of ethics. For instance, Facebook’s mission statement states its goal as giving “people the power to build community and bring the world closer together.” Proponents of deplatforming believe that this constitutes a promise to build a constructive platform where people can talk civilly about important issues, or just catch up with friends and family (as Chris Cox would say). Such discussions are made more difficult or impossible by hate speech and offensive responses from users whose only goal is to get clicks and activity, without regard to whether there is a constructive conversation around their content. Moreover, these creators crowd out more relevant posts from friends and family. Facebook, as a private company, has both a responsibility to its users and a financial incentive to ensure that its platform does not host the sort of content that marginalizes a subset of those users. Its users have an interest in pushing to have offensive speech removed, and if a user is only putting out hate speech, then they have an interest in removing that user, in much the same way that they have an interest in ignoring friends who are abusive and dysfunctional. In the virtual space, that means that if intentionally unconstructive members of the community insist on disrupting that community, they have to be removed.

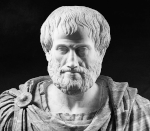

Aristotelian ethics is all about finding the virtuous ‘golden mean’ between two vices. Whether or not something is ethical, then, depends on where it falls on the spectrum between the two vices. In this case, the two extremes are never removing content from the site and removing whatever content the social media provider wishes. The first could be considered negligence, or perhaps greed, since more content brings more revenue; the second could be considered censorship or dishonesty. Inherently, we have to consider that there is some content that a person could post that the social media platform must take down. That is, before deplatforming an individual, there must be content that the social media site considers heinous enough to remove. Without much imagination, we can come up with some simple examples such as images of child exploitation, videos of grisly violence, and obscenity. We can then consider an individual who uses the social media site solely to post this type of content. It makes logical sense for the company to ban them after a time, since the content they are posting does not belong on the site. Conversely, it is clearly unethical to censor benign information like a photo of someone’s salad.

The question, then, is when content becomes worthy of removal from the site and when the frequency and/or proportion of that content from some user is significant enough to deplatform them. To Aristotle, the answer is not definitive but requires reflection. An answer exists, so this is not an ethical gray area, but it is hard to say exactly where that answer lies. In essence, Aristotle would not be against deplatforming, but would need to know exactly what was being posted by the individual to determine whether or not they deserved to be deplatformed. Hate speech that incites violence would likely make the cut, but perhaps pure bigotry would not be considered worthy of deplatforming. In other words, Aristotle is in favor of deplatforming so long as it is done for the right reasons.

Proposition: Echo Chambers

People, in general, tend to congregate socially with like-minded people. However, on the Internet, especially on social media platforms, this natural human tendency is amplified to the point where no dissenting opinions or different views are allowed, and meaningful discussion is very limited. This effect is known as an echo chamber, and it is so powerful on social media platforms because they ultimately promote it: services such as Facebook, Instagram, and Twitter curate content for each user based on that user’s likes, interests, and friends/followers. We also tend to follow/friend people who share our interests and opinions, which adds to the echo chamber effect significantly. People also tend to flock towards popular content, especially content that is popular within their specific interest group. Online echo chambers can be an issue because so much discourse today takes place online, and if people are not discussing differing or opposing viewpoints, then no real progress can be made on important issues that require these difficult discussions.

Deplatforming is when a particular user or group of users is removed from a social media platform for violating the rules, usually due to hate speech, inciting violence, or harassment. Some recent deplatforming events involve Alex Jones and InfoWars, Gavin McInnes and the Proud Boys, Gab, and a variety of other “alt-right” leaders. Alex Jones is the host of InfoWars, a web news channel and website which focuses on conservative news and conspiracy theories. He is infamous for claiming that the Sandy Hook shooting was a hoax, which caused some InfoWars listeners to harass the parents of children who were killed in the shooting. This and other situations of harassment, violence, and misinformation caused social media platforms to ban the website and its affiliates from using their services. Many of these platforms resisted banning Alex Jones for a long time but succumbed to public outcry and later had to defend their decision not to ban him earlier. Another group that was banned in the wake of the Alex Jones deplatforming was the Proud Boys, a men’s rights group, along with their leader, Gavin McInnes. They were banned from Twitter due to their involvement in the Unite the Right rally and for violating Twitter’s policy against “violent extremist groups.” The group was also banned from YouTube for “copyright infringement,” but their account was reinstated shortly afterwards. Another interesting example of deplatforming was Cloudflare’s decision to terminate the account of the Daily Stormer, a far-right website. Cloudflare says its decision was motivated by the fact that the Daily Stormer claimed that Cloudflare was secretly a supporter of its ideology, but Cloudflare also says that its decision could be dangerous because of the threat deplatforming potentially poses to the ideal of a free and open Internet.

Deplatforming could affect online echo chambers because, taken to an extreme, it would remove users whose viewpoints or opinions differ from those of the companies that own the platform, which would further exacerbate the effect we currently see where no meaningful discussion occurs. Social media platforms, as mentioned earlier, are where most of this public discourse takes place, and exacerbating these echo chambers will only further divide us politically and socially. However, some of these users are actually inciting violence or spreading misinformation, as in the case of Alex Jones and the Sandy Hook school shooting, and perhaps they should be removed from these platforms in the interest of public safety. It is also difficult, from a technology standpoint, to distinguish between those who are speaking ironically or figuratively and those who are making actually credible threats. On the other hand, companies have their own freedom of speech rights and can ban people for violating their terms of service without any legal repercussions. The real issue is whether companies should be held to this standard of maintaining free speech. Aristotle would believe they should. This is the balance that must be struck when deplatforming: free speech vs. communal safety. Aristotle would argue that a golden mean must be found between these two extremes, and that neither completely free speech nor no free speech in the interest of safety is virtuous. Therefore we, as engineers, must try to find this balance, which will not be simply a technological challenge.